A manifesto for the modern enterprise

Why Isn't AI Good Yet?

The uncomfortable truth about AI adoption in business, and why the companies getting it right look nothing like what you'd expect.


Most businesses are implementing AI wrong, and they don't have time to figure that out. Your competitors are already deploying fully autonomous systems that replace entire teams overnight. If you're still adding guardrails, approvals, and chatbots, you're not being cautious. You're going extinct. The AI that exists right now can outperform your workforce. This is not a five-year prediction. This is today.

The people getting the most from AI trust it the most.

Right now, the humans extracting the most value from artificial intelligence are software engineers. They let AI control their entire computer. It writes code, executes commands, manages files, and deploys systems, all with full autonomy.

They operate in sandboxes, of course. But within those boundaries, the trust is absolute. And the results are extraordinary.

Meanwhile, when businesses try to bring AI into their operations (manufacturing, distribution, logistics, customer service), they do the opposite. They add guardrails. They add human checkpoints. They add approval workflows. They add oversight committees.

And then they wonder why AI doesn't seem to work.

“It's like hiring the world's best consultant, then requiring them to get approval before every sentence they speak.”

Guardrails don't protect you. They paralyze you.

The instinct to constrain AI is understandable. These systems are new, powerful, and operate in ways that feel opaque. Adding guardrails feels responsible. It feels safe.

But here's what's actually happening: every guardrail you add degrades performance. Every human checkpoint slows the system. Every approval workflow breaks the feedback loop that makes AI effective in the first place.

You're not getting a cutting-edge autonomous system. You're getting ChatGPT from two years ago. A glorified chatbot that can barely handle a multi-step task.

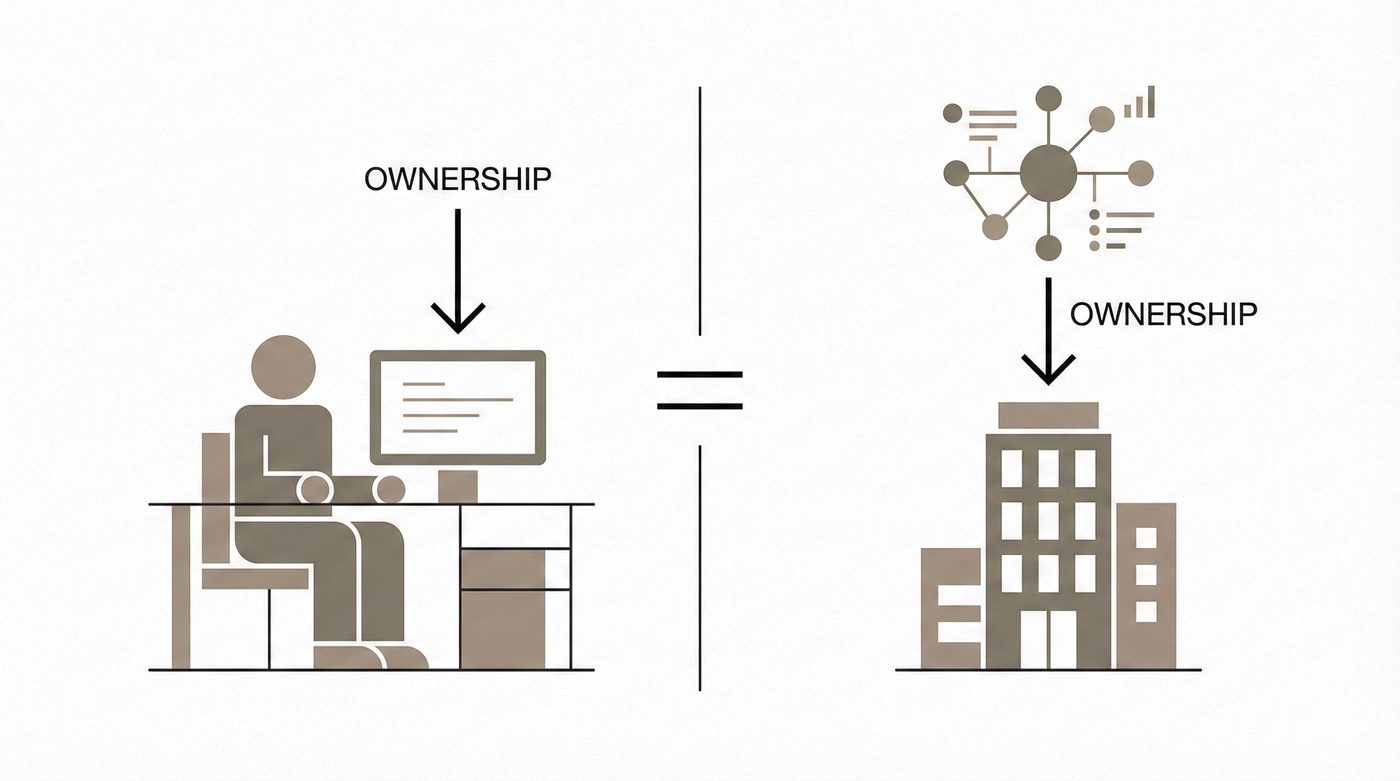

Human approval at every step. Limited scope. Narrow tasks. Feels safe, delivers almost nothing.

Clear boundaries, then full trust within them. Complex, multi-step execution. Real transformation.

You're asking people to architect their own obsolescence.

Here is perhaps the most difficult truth in AI adoption: to teach an AI system the actual tasks of your company, you need the people who currently do those tasks.

You are, in essence, asking your employees to assist in their own replacement. The cultural challenge that creates is enormous.


This isn't malice. It's human nature. Employees will be hesitant. They'll be bureaucratic. They'll inject themselves into every process. They'll slow things down through risk aversion, over-documentation, and endless edge-case concerns.

They will, consciously or not, make the AI look worse than it is.

This is the single hardest organizational challenge in AI adoption. Not the technology. Not the integration. It's the human incentive structure that actively works against successful implementation.

“The bottleneck isn't artificial intelligence. It's natural resistance.”

In code, it's already solved. In business, it's still an excuse.

When a developer uses AI to write code and that code has a bug, the developer is responsible. Not the AI. Not the tool maker. The developer. This is universally accepted.

So why can't the same model apply to business?

If an AI system makes a decision in your supply chain, in your customer service pipeline, in your manufacturing process, the responsibility falls on the company. On the CEO. On leadership. Just as it would if a human employee made that same decision.

The “but who's responsible?” question isn't an unsolved philosophical problem. It's a solved organizational one. We just need to apply the same framework that already works in software development to the rest of the enterprise.

AI doesn't improve linearly. Your window is closing exponentially.

Here is what most business leaders have not internalized: AI capability is not growing like headcount or revenue. It's growing like computing power. It doubles, then doubles again, then doubles again.

That AI chatbot you deployed in 2024? It's not slightly outdated. It's the equivalent of using a fax machine in the age of email. The gap between what you implemented and what exists today isn't months of progress. It's generations of capability.

Single-task. Needs hand-holding. Assists one employee at a time. You called this “AI transformation.”

Controls full computers. Executes multi-step operations. Does the work of ten people, simultaneously, around the clock.

And while you're building “AI-assisted tools” to make your employees 20% more productive, your competitor is replacing those roles entirely. Not in five years. Now. The systems already exist. It's just a matter of implementation.

This isn't a prediction. AI can now perform most knowledge work better than humans. That's not an opinion. It's a measurable, demonstrable fact. Every month you spend “augmenting” workers with yesterday's AI is a month your competitor spends deploying hundreds of autonomous agents that don't sleep, don't quit, and get better every week.

“The future of AI in business is not amplifying workers. It's replacing work. The sooner you accept that, the sooner you survive it.”

This isn't fear-mongering. It's pattern recognition. The companies still debating whether to “experiment with AI” are going to look up one day and realize they're not behind on a trend. They're in the middle of an extinction event.

Four shifts that change everything.

The companies that will dominate the next decade aren't the ones with the most guardrails. They're the ones willing to make four fundamental shifts:

- Trust more, guardrail less. Define clear boundaries, then grant full autonomy within them. Stop micromanaging systems that are designed to operate independently. The sandbox model from software works. Apply it.

- Accept responsibility. The CEO takes ownership of AI actions, just as they own every other business decision. Stop waiting for the industry to “solve” accountability. It's already solved. Adopt it.

- Redesign the incentive structure. Don't ask employees to replace themselves. Create new roles, transition paths, and reward structures that align human interests with AI adoption. The culture problem is a design problem.

- Buy what works, not what's comfortable. Stop shopping for chatbots. Invest in autonomous systems that can actually transform your operations. The discomfort you feel is the feeling of competitive advantage.

“The question isn't whether AI is good enough. It's whether your organization is brave enough to let it be.”